GeoWerkstatt Project of the Month: October 2019

Project: Learning Disparity for Dense Stereo Matching

Researcher: Junhua Kang

Project idea: Depth maps are computed quickly and accurately from stereoscopic images using machine learning methods

Dense stereo matching has long been an active research area in photogrammetry and computer vision. It is widely used in applications such as robotics and autonomous driving, 3D model reconstruction, and object detection and recognition. The core task of dense stereo matching is to find pixel-wise correspondences between images and thus to compute the parallax (called disparity in computer vision) of corresponding pixels.
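
Disparity matters because, for a rectified stereo pair, it converts directly into depth through the standard triangulation relation Z = f · B / d, where f is the focal length in pixels, B is the baseline (the distance between the two camera centres) and d is the disparity in pixels. The following minimal Python sketch assumes a rectified pair; the function name and the camera values in the usage line are hypothetical, chosen only for illustration.

```python
def disparity_to_depth(disparity_px, focal_px, baseline_m):
    """Depth Z = f * B / d for a rectified stereo pair (hypothetical helper).

    disparity_px: disparity in pixels
    focal_px:     focal length in pixels
    baseline_m:   distance between the two camera centres in metres
    """
    if disparity_px <= 0:
        # Zero disparity corresponds to a point at infinity (or an invalid match).
        return float("inf")
    return focal_px * baseline_m / disparity_px


# Usage with illustrative (made-up) camera parameters.
depth = disparity_to_depth(36.0, focal_px=720.0, baseline_m=0.54)
print(round(depth, 3))  # 10.8
```

Because Z is inversely proportional to d, a fixed one-pixel disparity error produces a depth error that grows roughly with Z², which is one reason accurate, fine-grained disparity maps are desirable.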

Traditional stereo algorithms treat disparity estimation as a similarity measurement problem, that is, measuring the similarity between corresponding patches of two or more images. They measure similarity using hand-crafted matching cost metrics, which often struggle in inherently problematic regions such as texture-less areas, repetitive patterns, occlusions and areas with large disparity changes.
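
As a concrete illustration of such a hand-crafted approach (a generic textbook method, not the method of this project), the sketch below implements local block matching: for each left-image pixel it compares a small window with windows in the right image shifted by each candidate disparity, scores them with the sum of absolute differences (SAD), and keeps the disparity with the lowest cost (winner-take-all). It assumes a rectified grayscale pair stored as nested lists; the function name, its parameters and the synthetic test images are hypothetical.

```python
def sad_block_matching(left, right, max_disp, radius=1):
    """Winner-take-all disparity from SAD costs (hypothetical helper).

    left, right: rectified grayscale images as lists of rows (H x W).
    A left pixel at column x is compared with the right pixel at x - d.
    """
    h, w = len(left), len(left[0])

    def clamp(v, lo, hi):
        return max(lo, min(v, hi))

    disparity = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            best_cost, best_d = float("inf"), 0
            # Only test disparities that keep the centre pixel inside the right image.
            for d in range(min(max_disp, x) + 1):
                cost = 0
                # Sum of absolute differences over a (2r+1) x (2r+1) window;
                # border pixels are replicated by clamping the coordinates.
                for dy in range(-radius, radius + 1):
                    yy = clamp(y + dy, 0, h - 1)
                    for dx in range(-radius, radius + 1):
                        xl = clamp(x + dx, 0, w - 1)
                        xr = clamp(x + dx - d, 0, w - 1)
                        cost += abs(left[yy][xl] - right[yy][xr])
                if cost < best_cost:  # winner-take-all: keep the cheapest disparity
                    best_cost, best_d = cost, d
            disparity[y][x] = best_d
    return disparity


# Usage: a synthetic textured pair in which the left view is the right view shifted by 2 px.
def texture(x, y):
    return (7 * x + 13 * y) % 17


right = [[texture(x, y) for x in range(12)] for y in range(5)]
left = [[texture(x - 2, y) for x in range(12)] for y in range(5)]
disp = sad_block_matching(left, right, max_disp=4)
print(disp[2][3:11])  # [2, 2, 2, 2, 2, 2, 2, 2]
```

On this well-textured synthetic pair the correct disparity is unambiguous. In a texture-less region, however, many candidate disparities yield nearly identical SAD costs, which is exactly the ambiguity described above.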

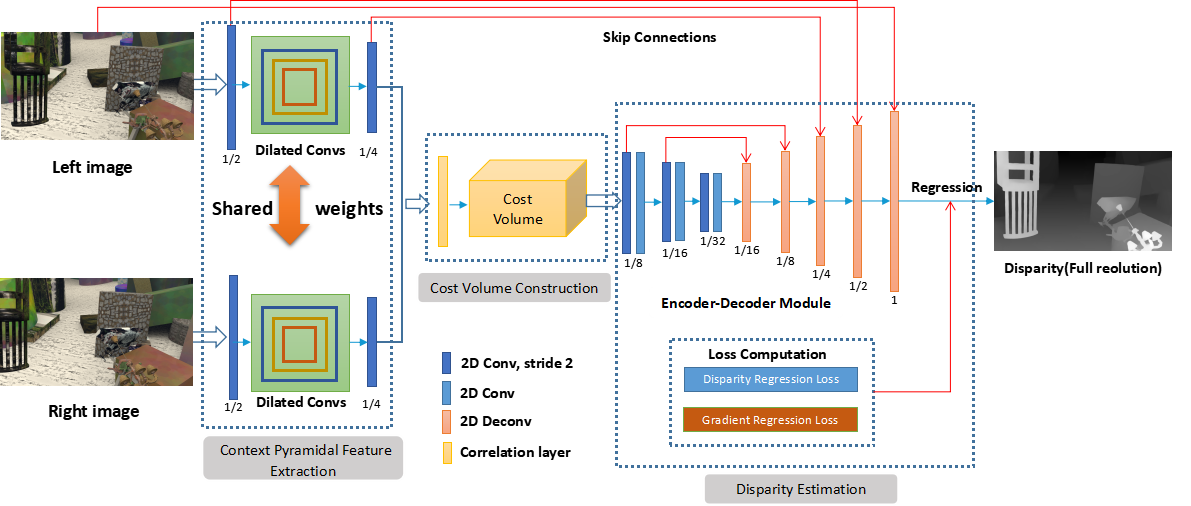

In this project, our goal is to develop an end-to-end deep learning model that directly predicts dense disparity maps without post-processing. We employ deep convolutional networks to formulate dense stereo matching as a pixel-wise learning task. First, we develop a context pyramidal feature extraction module to extract multi-scale features. Then, we explicitly encode correlation information in our model to construct a cost volume, which enables the network to capture correspondences between the stereo pairs. Finally, we employ a deep encoder-decoder module to learn a regularization function and refine the disparity estimate. The pipeline of our research is illustrated in the following figure.
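
The correlation step can be illustrated with a simplified, non-learned sketch. It assumes left and right feature maps of shape C x H x W (nested lists) and builds a correlation cost volume in which entry [d][y][x] is the mean channel-wise product of the left feature at column x and the right feature at column x - d; averaging over channels is one common normalization and an assumption here, not necessarily the one used in this project. A disparity is then read out with a soft-argmax over the disparity axis, a differentiable alternative to winner-take-all used in several end-to-end stereo networks. The learned feature pyramid and the encoder-decoder regularization are omitted, and all names and the one-hot test features are hypothetical.

```python
import math


def correlation_cost_volume(feat_left, feat_right, max_disp):
    """Correlation cost volume (hypothetical helper).

    feat_left, feat_right: feature maps as nested lists of shape C x H x W.
    Returns a (max_disp + 1) x H x W volume where volume[d][y][x] is the mean
    over channels of feat_left[c][y][x] * feat_right[c][y][x - d].
    """
    channels = len(feat_left)
    h, w = len(feat_left[0]), len(feat_left[0][0])
    volume = [[[0.0] * w for _ in range(h)] for _ in range(max_disp + 1)]
    for d in range(max_disp + 1):
        for y in range(h):
            # Columns with x - d < 0 have no partner in the right view and stay 0.0.
            for x in range(d, w):
                total = sum(feat_left[c][y][x] * feat_right[c][y][x - d]
                            for c in range(channels))
                volume[d][y][x] = total / channels
    return volume


def soft_argmax_disparity(volume):
    """Differentiable disparity read-out: softmax over d, then the expected d."""
    n_disp = len(volume)
    h, w = len(volume[0]), len(volume[0][0])
    disparity = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            scores = [volume[d][y][x] for d in range(n_disp)]
            peak = max(scores)  # subtract the maximum for numerical stability
            weights = [math.exp(s - peak) for s in scores]
            total = sum(weights)
            disparity[y][x] = sum(d * wt for d, wt in enumerate(weights)) / total
    return disparity


# Usage: one-hot "features" encoding the column index modulo 8,
# with the left view shifted by 2 px relative to the right view.
def one_hot_features(shift, channels=8, h=3, w=10, scale=10.0):
    return [[[scale if (x - shift) % channels == c else 0.0 for x in range(w)]
             for _ in range(h)] for c in range(channels)]


volume = correlation_cost_volume(one_hot_features(2), one_hot_features(0), max_disp=4)
disp = soft_argmax_disparity(volume)
print(round(disp[1][5], 3))  # 2.0
```

Taking a plain argmax over d instead would give the winner-take-all estimate; the soft version keeps the read-out differentiable, so that feature extraction and regularization can be trained end to end from ground-truth disparities.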

First results demonstrate the quality of our approach: it performs on par with other state-of-the-art approaches in most areas and is superior at capturing fine detail.